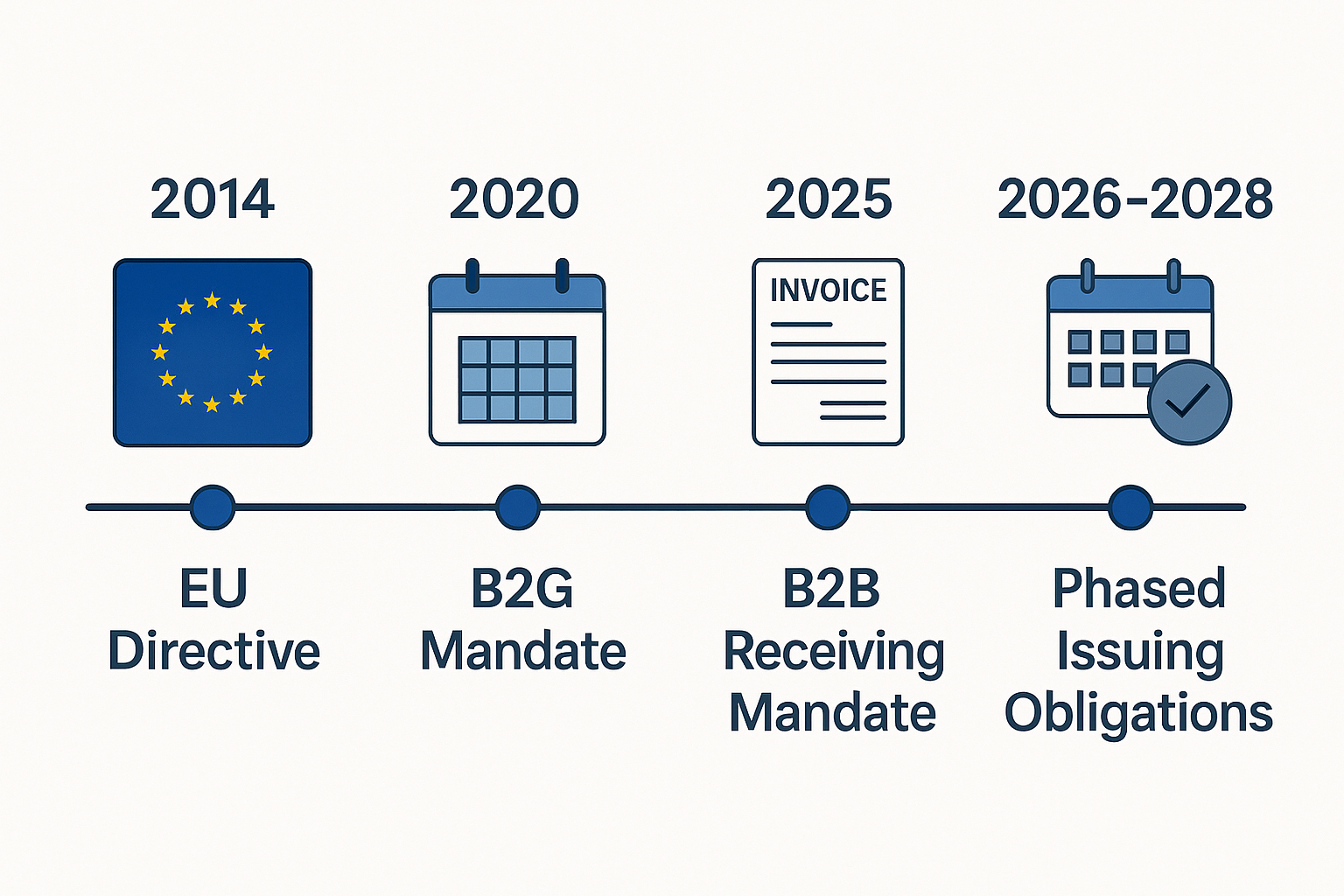

In Parts 1 and 2 of our series on the challenges of electronic invoices in Germany, we have

- explored how Germany’s e-invoicing mandate has highlighted the critical need for robust, reliable software that can handle complex data structures like XRechnung and ZUGFeRD.

- demonstrated how we exposed hidden bugs in the Mustang e-invoicing tool, the XRechnung standard, and the European norm for e-invoices, with schema-driven synthetic test data.

- advocated for more thorough testing of critical software processing complex data with high-quality test data to find these bugs before they disrupt business operations.

The Bigger Picture: Beyond E-Invoicing

The lessons learned extend far beyond e-invoicing. Many industries face similar challenges: strict data standards, complex schemas, and severe consequences for data errors.

- Financial services: Payment processing, electronic banking statements, and insurance claims often rely on formats like ISO 20022, SWIFT messages, or SEPA direct debit files. A single bug in these systems could lead to payment failures or regulatory fines. Banks and fintechs must rigorously test against all possible transaction scenarios to prevent costly errors.

- Healthcare: Health data exchange standards like HL7 FHIR or DICOM for medical imaging are highly structured and privacy-sensitive. Errors in processing these can be life-threatening or violate data protection laws such as GDPR or HIPAA. Testing with synthetic patient data is crucial to ensure that systems handle both common and rare edge cases effectively.

- Supply chain and Electronic Data Interchange: Global supply chains rely on EDI messages (e.g., EDIFACT, UBL) for orders, invoices, and shipment notices. Misinterpreting an order could halt production. Each trading partner might have slight variations, making robust testing with diverse synthetic orders and shipments vital to preventing logistical disruptions.

- Public sector and legal: Beyond invoices, government mandates cover reports like tax filings, compliance documents, and statistical reports. Given the legal implications of data errors, software in these areas must be bulletproof.

This list is not exhaustive! In all these domains, relying on test data sets that are too small or superficial, like a handful of “real” invoices, is extremely risky. The complexity of the standards requires systematic, automated, and comprehensive testing. This is where customizable test data, tailored to exact specifications and business needs, becomes invaluable.

The Power of Schema-Driven Test Data

Traditional test data generation often starts from production data (after the removal of sensitive information) or existing, small test datasets consisting of a few examples. InputLab’s schema-driven approach flips this concept: it uses the explicit rules and constraints of the data model to generate test cases. This method offers several advantages:

- Completeness: Our holistic data schema model lets us systematically explore the data format, making sure that every field, every optional path, and every rule is exercised.

- Edge Case Coverage: Using both schema and user-defined constraints, we generate not just typical data but also deliberately nasty inputs: extreme values such as maximum lengths, boundary dates and numbers, unusual currency conversions, attack values, and the like. Our algorithmic approach systematically covers scenarios human testers might overlook.

- Privacy and Compliance: Since the data is synthetic, it contains no real personal data. This eliminates GDPR risks, making the data safe to use in testing environments, share with vendors, or include in bug reports.

- Customizable and Reproducible: Need more test cases? Generate 1000 more overnight. Found a bug in a specific scenario? Tweak the data generator to produce variations of that scenario to ensure the fix works across similar cases. Hand-curated datasets cannot keep up with this level of scalability.
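To make the idea concrete, here is a minimal Python sketch of how boundary cases can be derived from a schema. The schema format, the field names, and both helper functions are assumptions made up for this illustration; they are not InputLab's data model or API, and a real generator would also handle nested structures and cross-field rules.

```python
from itertools import product

# Hypothetical, heavily simplified schema: field name -> constraints.
SCHEMA = {
    "amount_cents": {"type": "integer", "min": 0, "max": 99_999_999},
    "currency": {"type": "enum", "values": ["EUR", "USD"]},
    "note": {"type": "string", "max_length": 5, "optional": True},
}


def boundary_values(spec):
    """Return the edge-case values implied by one field's constraints."""
    if spec["type"] == "integer":
        values = [spec["min"], spec["max"]]
    elif spec["type"] == "enum":
        values = list(spec["values"])
    elif spec["type"] == "string":
        values = ["", "x" * spec["max_length"]]
    else:
        raise ValueError(f"unsupported type: {spec['type']}")
    if spec.get("optional"):
        values.append(None)  # None stands for "field omitted"
    return values


def generate_cases(schema):
    """Yield one record per combination of boundary values."""
    names = list(schema)
    per_field = [boundary_values(schema[name]) for name in names]
    for combo in product(*per_field):
        # Drop omitted optional fields from the record.
        yield {n: v for n, v in zip(names, combo) if v is not None}


cases = list(generate_cases(SCHEMA))
print(len(cases), "boundary cases generated")
```

Even this toy schema produces every combination of minimum and maximum amount, each currency, and an empty, maximum-length, or omitted note. The full cross product grows quickly with the number of fields, so larger schemas would need a smarter selection strategy such as pairwise combination.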

Let’s take a look at a scenario where failures become expensive fast: banking. A fintech develops a new system for processing wire transfers. Using schema-driven synthetic data, the team can simulate transactions from 200 countries, with every possible currency and odd edge cases (e.g., extremely large amounts, non-ASCII characters), before the system ever goes live. The developers can generate millions of transactions to test performance, functionality, and data integrity. This approach helps catch bugs early and gives the team justified confidence that “we’ve seen it all” during QA.

Can’t We Use AI for That?

Schema-based test data generation is not the only alternative to writing test data by hand or scraping it from production data. If you’ve come this far in the article, you might have wondered whether AI (neural networks, LLMs like ChatGPT) could do the job. And yes, it can! There are tools that learn AI-based models from production data, and it’s absolutely possible to prompt ChatGPT with a carefully designed prompt and a few examples to obtain very realistic test data for many use cases. Indeed, realism is the strength of production data and of the AI-based approaches. In every other aspect, schema-based generation outperforms these alternatives. Schema-based test data generation

- guarantees absolute GDPR compliance and protects your sensitive production data.

- covers negative and edge cases systematically, not just by accident.

- gives you full control over the generated data.

- works for use cases where production data is not yet available or incompatible.

- only produces valid data (if you don’t tell it to include invalid examples!), whereas ChatGPT can lose track of format requirements.

- provides a high level of reproducibility: your specification plus a fixed seed value yields the same data every time. Great for legal documentation requirements!
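The reproducibility point can be illustrated with a short Python sketch. The function name, the record fields, and the value ranges are assumptions chosen for this example, and the standard library's `random.Random` stands in for whatever generator a real tool uses.

```python
import random


def generate_invoices(seed, count):
    """Generate `count` toy invoice records from a fixed seed."""
    rng = random.Random(seed)  # local generator, independent of global state
    return [
        {
            "invoice_id": f"INV-{rng.randrange(10**6):06d}",
            "net_amount_cents": rng.randint(0, 10_000_000),
            "tax_rate_percent": rng.choice([0, 7, 19]),
        }
        for _ in range(count)
    ]


first_run = generate_invoices(seed=42, count=100)
second_run = generate_invoices(seed=42, count=100)
other_seed = generate_invoices(seed=43, count=100)

print("same seed, same data:", first_run == second_run)
print("different seed, same data:", first_run == other_seed)
```

Storing the specification and the seed is enough to regenerate the exact data set later, for example when a test run has to be documented or a failure replayed. Note that Python only guarantees identical sequences for the same seed within one interpreter version, so the version belongs in the record as well.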

Shift-Left Testing Enabled by Test-Data-as-a-Service

Modern software quality assurance advocates for “Shift-Left” testing, meaning testing early and often in the development lifecycle. Readily available synthetic test data supports this approach in several ways:

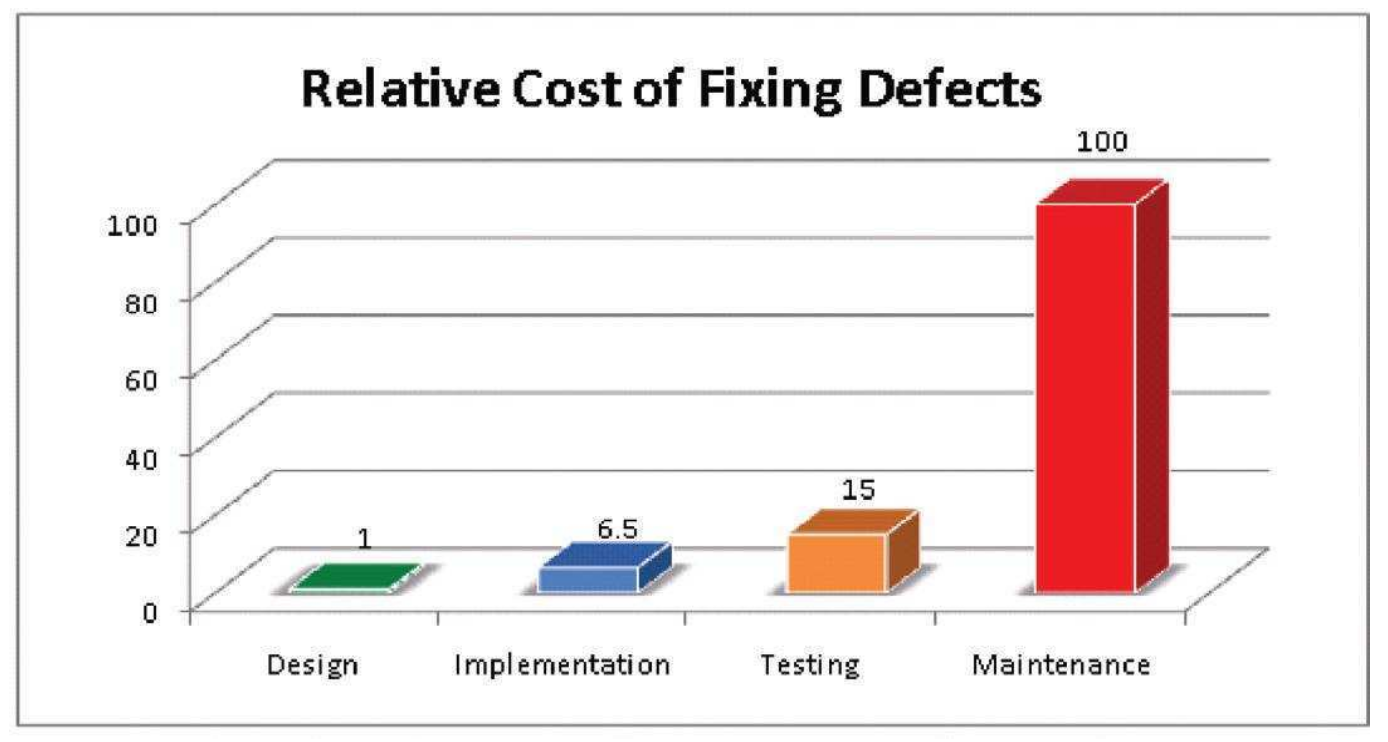

- With diverse datasets, developers can conduct more thorough integration testing, identifying issues early and reducing costly fixes later.

- Automated continuous integration pipelines can generate fresh data sets every time a new build is created. Thus, you can run comprehensive tests and boost your software quality within hours rather than weeks.

- Shift-left is not just about functionality, but also about performance. Schema-driven data can easily be scaled to large volumes, enabling realistic load testing early on. Thus, teams can assess how systems handle extreme volumes without compromising real user data.

- Many compliance-heavy processes involve multiple systems (e.g., an invoice proceeds from the supplier’s system to the clearing platform to the buyer’s system). Synthetic data can be shared across teams to simulate these end-to-end flows, enabling coordinated testing.
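One way to get fresh data for every build while still being able to replay a failing run is to derive the generator seed from the build identifier. The following Python sketch shows the idea; the environment variable name `BUILD_ID`, the helper names, and the generated amounts are assumptions for illustration, since CI systems expose build identifiers under different names.

```python
import hashlib
import os
import random


def seed_for_build(build_id: str) -> int:
    """Map a build identifier to a stable 64-bit seed."""
    # hashlib is used instead of hash(), which is salted per process for strings.
    digest = hashlib.sha256(build_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def generate_amounts(build_id: str, count: int):
    """Generate `count` toy payment amounts (in cents) for one build."""
    rng = random.Random(seed_for_build(build_id))
    return [rng.randint(0, 10_000_000) for _ in range(count)]


# Hypothetical variable name; falls back to a fixed label for local runs.
build_id = os.environ.get("BUILD_ID", "local-dev")
amounts = generate_amounts(build_id, count=1000)
print(f"build {build_id}: generated {len(amounts)} amounts")
```

Each new build identifier yields a different data set, and logging the identifier alongside the test results is enough to regenerate the data that triggered a failure.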

At InputLab, we’re currently building a comprehensive Test-Data-as-a-Service (TDaaS) platform that delivers on-demand synthetic test data tailored to each client’s needs. Here’s why it makes a difference for organizations:

- Eliminates In-House Complexity: Organizations no longer need to develop deep expertise on every data standard. InputLab’s technology can handle a wide range of specifications, allowing teams to focus on development rather than data preparation.

- Customizable for Specific Scenarios: Users can request highly specific test data, like “500 XRechnung invoices that all include a discount and use three specific tax rates,” which is ideal for targeted feature testing or compliance validation.

- Seamless API Integration: Developers can easily integrate test data into automated testing pipelines via APIs, allowing for continuous testing with freshly generated datasets.

- Always Up-to-Date: As standards evolve (e.g., new versions of ZUGFeRD or changes in regulations), existing test data may no longer be valid. InputLab’s data management system ensures that generated data always aligns with the latest compliance requirements.

Early Testing with Rich Data: A Smart Investment

Crucially, early testing with rich data reduces bugs in production. Studies and industry experience have shown that fixing a defect caught in production can cost 10 to 100 times as much as fixing it during development (considering hotfixes, potential legal issues, and damage control) [1, 2]. In compliance-heavy environments, that multiplier can be even higher due to fines and reputational damage. Investing in high-quality test data and thorough testing is not just a sound technical strategy. It is a smart business and risk-management decision.

A Call to Action for Reliable Software

In this series, we’ve explored how comprehensive, schema-driven test data can expose hidden bugs, mitigate regulatory risks, and ensure reliable software in compliance-heavy industries. As digital transformation accelerates, the cost of software errors increases. Data-driven testing can help prevent these costly errors.

InputLab’s mission is to empower organizations with the tools and data they need to test thoroughly and confidently. Whether it’s ensuring e-invoice compliance, validating financial transactions, or safeguarding patient data, the same principles apply:

Test early. Test comprehensively. And test with data that truly covers all possible scenarios.

Ready to fortify your software against unexpected failures?

Contact InputLab to learn how synthetic test data can strengthen your testing strategy, mitigate risk, and elevate software quality across all domains. Ultimately, better testing increases business profits and benefits society by improving the IT systems we increasingly depend on.

1. Dawson, Maurice, et al. “Integrating software assurance into the software development life cycle (SDLC).” Journal of Information Systems Technology and Planning 3.6 (2010): 49-53.

2. Gallaher, M. P., and B. M. Kropp. “The economic impacts of inadequate infrastructure for software testing.” National Institute of Standards & Technology Planning Report (2002): 02-03.